What does that mean and imply?

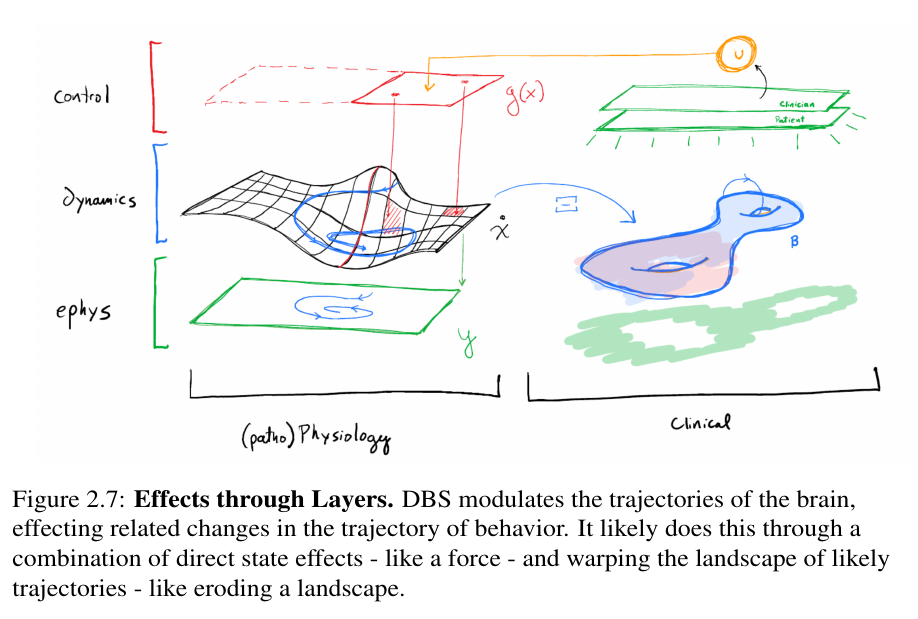

Neuromodulation is applied control theory - it always has been, and arguably it always will be. The goal is to control a patient’s state or symptom in their context [1].

For various reasons, we’ve forgotten this. And I think we’re losing efficacy, efficiency, and ethics of inference. Instead of reverse engineering, we’re dissecting - and losing the phenomena we’re fascinated with in the process.

Here are some loose thoughts that I need to organize for a bigger, more coherent post.

General Thoughts

Neuromodulation has always been “do whatever it takes to alleviate symptoms, then figure out why your intervention worked”. This is not rigorous science - which is actually a good thing. It’s a clinician operating with various models in their head, predicting which interventions will work, being partly right and partly wrong, and then tightening the whole loop across extreme clinical cases where there is an imperative to act.

The vanilla scientific method (SM) considers it a virtue to change only one variable at a time, to ensure causal interpretability. Control theory (CT) is all about achieving a specific state, changing any or all variables needed to get there.

SM is obsessed with isolating variables, running perfectly measured experiments in large samples, and rigorously showing that an independent variable (IV) causes changes in a dependent variable (DV). We’re supposed to forget that this is a conditional inference that only applies when the IV and DV are isolated from everything else - which almost never happens in the real world.
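
To make the “conditional inference” point concrete, here is a minimal toy sketch. Everything in it is an assumption made up for illustration: the function `dv`, the sign-flipping `context` variable, and the sample size. It is not a model of any real neuromodulation experiment; it only shows that a slope measured with context clamped need not carry over once context is free to vary.

```python
import random

def dv(iv, context):
    # Hypothetical toy system: the effect of the IV flips sign with context.
    return iv * context

def slope(xs, ys):
    # Ordinary least-squares slope of ys regressed on xs.
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var = sum((x - mx) ** 2 for x in xs)
    return cov / var

rng = random.Random(0)  # fixed seed so the sketch is repeatable
ivs = [rng.uniform(-1.0, 1.0) for _ in range(5000)]

# "Lab" condition: context is clamped to +1, so the IV looks perfectly causal.
lab = [dv(iv, 1.0) for iv in ivs]

# "World" condition: context varies freely, and the average effect washes out.
world = [dv(iv, rng.choice([-1.0, 1.0])) for iv in ivs]

lab_slope = slope(ivs, lab)
world_slope = slope(ivs, world)
print(f"lab slope:   {lab_slope:.3f}")
print(f"world slope: {world_slope:.3f}")
```

The lab slope is a perfectly valid result; it just answers a question about a clamped context that the patient never lives in.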

There are warped funding incentives here. The people in charge, mainly biostatisticians, will be very reluctant to adopt CT since (a) CT is drastically different in its approach from reductionist empiricism, and (b) if they admit CT is a more appropriate frame, they lose their claim to being the best people to give neuromodulation grant money to.

The IV->DV base model is oversimplified. The CT base model has more of the pieces that we know neuromodulation involves, and arguably has the minimum expressivity needed to capture everything we care about.
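
As a rough side-by-side, here are the two base models in standard textbook notation. The symbols are my own labels for this sketch, not anything specific to a device or study: $x_t$ is the latent patient state, $u_t$ the stimulation, $y_t$ what we can measure, $w_t$ and $v_t$ the context/disturbances and measurement noise, and $r$ the target state.

$$
\begin{aligned}
\text{IV->DV:}\quad & \mathrm{DV} = g(\mathrm{IV}) + \varepsilon \\
\text{CT:}\quad & x_{t+1} = f(x_t, u_t, w_t) \\
& y_t = h(x_t, v_t) \\
& u_t = \pi(y_0, \dots, y_t;\, r)
\end{aligned}
$$

The IV->DV line has no time index, no hidden state, no context term, and no goal. The CT lines have all four, which is roughly the list of things neuromodulation is known to involve.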

Adaptive DBS is, by definition, a control-theoretic problem - I might even say it’s a domain application of canonical CT. Doing adaptive DBS without formal training in control theory is like building a bridge without formal training (and licensure) in civil engineering.
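
A minimal sketch of what “closed loop” buys you, under loud assumptions: the first-order plant, its coefficients, the step change in context, and the PI gains below are all invented for illustration and are not a physiological model or anyone’s actual adaptive DBS algorithm. The comparison is between a fixed stimulation amplitude tuned for one context and a textbook proportional-integral (PI) controller that keeps adjusting.

```python
def simulate(controller, steps=200, target=2.0):
    """Run a toy first-order 'biomarker' plant under a stimulation policy."""
    x = target  # biomarker starts at the target level
    trace = []
    for t in range(steps):
        d = 1.0 if t < steps // 2 else 2.0  # context shifts halfway through
        u = controller(x, target)           # stimulation amplitude
        x = 0.9 * x + d - 0.5 * u           # hypothetical plant dynamics
        trace.append(x)
    return trace

def open_loop(x, target):
    # Fixed amplitude, tuned by hand to hold the target in the first context.
    return 1.6

def make_pi(kp=0.5, ki=0.1, u_max=10.0):
    """Textbook PI controller; gains and the amplitude cap are assumptions."""
    integral = 0.0
    def controller(x, target):
        nonlocal integral
        error = x - target      # positive when the biomarker is too high
        integral += error
        u = kp * error + ki * integral
        return min(max(u, 0.0), u_max)  # keep amplitude within [0, u_max]
    return controller

open_trace = simulate(open_loop)
closed_trace = simulate(make_pi())

open_error = abs(open_trace[-1] - 2.0)
closed_error = abs(closed_trace[-1] - 2.0)
print(f"open-loop final error:   {open_error:.4f}")
print(f"closed-loop final error: {closed_error:.4f}")
```

In this toy, the fixed setting is fine until the context shifts and then drifts far from the target, while the PI loop pulls the biomarker back. Choosing the gains so that the loop stays stable is exactly the part that needs control theory.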

Dynamics often gets averaged out in rigorous science - time is seen as a statistical sample with the fragile assumption of stationarity - but it’s arguably the entire point of what we’re doing. CT is intrinsically dynamical; dynamics isn’t optional. Backing dynamics out of the averages of averages typical of reductionist science and null-hypothesis significance testing (NHST) is not trivial - arguably we have to go all the way back to the raw data, in which case why not use the right framework from the start?
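
A tiny illustration of what averaging throws away, using two made-up signals (the names `steady` and `oscillating`, the period, and the length are arbitrary choices for this sketch):

```python
import math
from statistics import mean

n = 1000
# Two hypothetical biomarker recordings with the same time-average.
steady = [1.0 for _ in range(n)]
oscillating = [1.0 + math.sin(2 * math.pi * t / 50) for t in range(n)]

def swing(signal):
    # Peak-to-peak range: a crude summary that still sees the dynamics.
    return max(signal) - min(signal)

print(f"means:  {mean(steady):.3f} vs {mean(oscillating):.3f}")
print(f"swings: {swing(steady):.3f} vs {swing(oscillating):.3f}")
```

A summary table built from the means alone would call these two recordings identical, and nothing downstream of that table can recover the difference.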

[1] Reductionist experiments won’t help much, because what do they tell us about how our intervention will behave when all other variables are chaotically dancing?