Brain Circuit Maps

I did my PhD with one of the pioneers of Deep Brain Stimulation - Dr. Helen Mayberg. Her current research center is called “Center for Advanced Circuit Therapeutics” at Mt. Sinai SOM in NYC.

What, exactly, a “brain circuit” is has been a topic she and I have talked about for over a decade now [1]. My background is in electrical engineering and medicine, so I’ve got some (apparently unique) thoughts…

One of the key mistakes I’ve seen emerge in work on “brain circuits” is overlooking the possibility of “two hit” (patho)physiology. Let’s see what that means here.

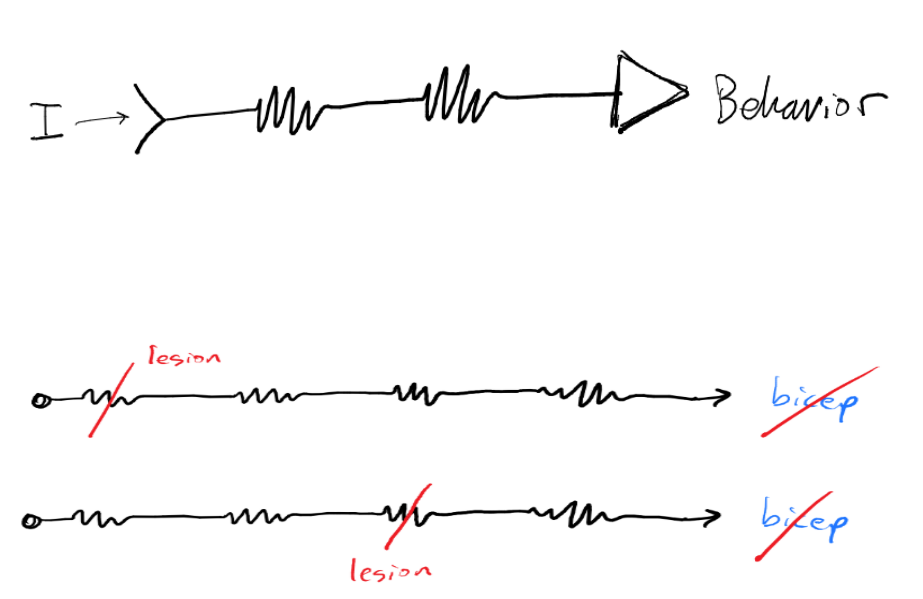

Circuits drive behaviors.

Let’s start with an axiom - circuits drive behaviors.

In the circuit above, if you remove either resistor, $R_1$ or $R_2$, then behavior $\beta_1$ doesn’t get any pseudocurrent. So behavior $\beta_1$ goes away.
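To make that concrete, here is a minimal sketch that treats each resistor as either intact or knocked out. It assumes the figure shows $R_1$ and $R_2$ in series, which is what the text implies; the function name `series_behavior` is made up for illustration.

```python
# Toy model (an assumption, not a biophysical simulation):
# a resistor is either intact (True) or knocked out (False).
def series_behavior(r1_intact: bool, r2_intact: bool) -> bool:
    """beta_1 is present only if pseudocurrent can pass both R1 and R2."""
    return r1_intact and r2_intact


# Try no lesion, then a lesion at each resistor in turn.
for lesion in (None, "R1", "R2"):
    present = series_behavior(lesion != "R1", lesion != "R2")
    print(f"lesion={lesion}: beta_1 present = {present}")
```

In this toy version, every single lesion abolishes $\beta_1$, so a one-lesion-at-a-time experiment would recover the full circuit.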

But what about a slightly more complex circuit?

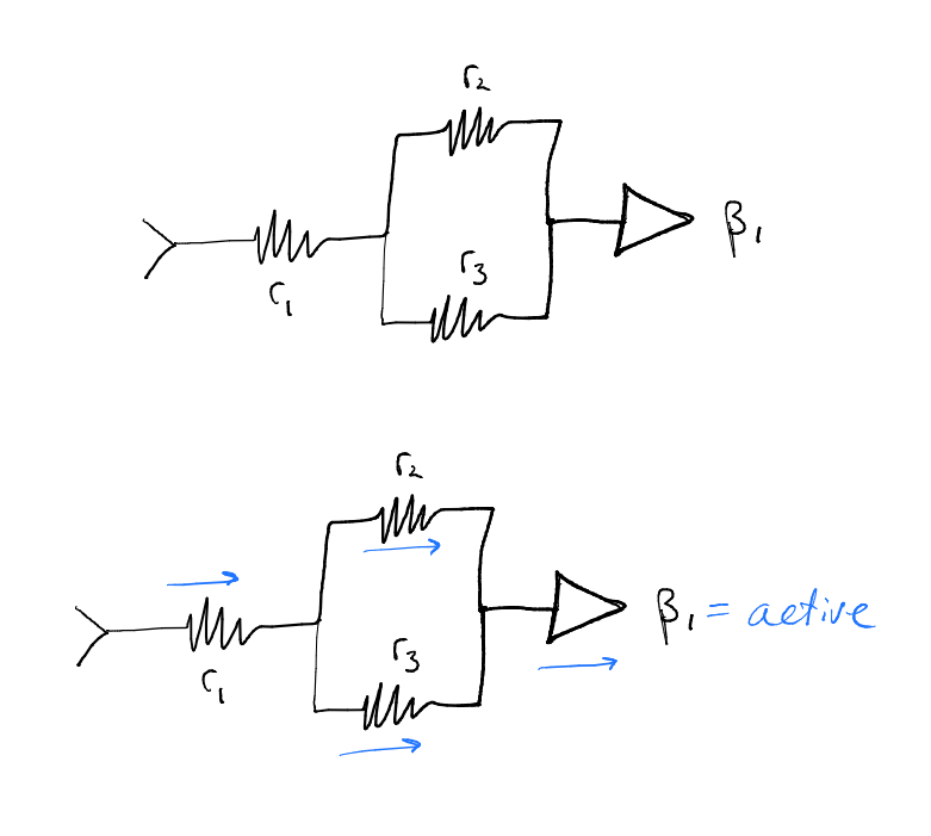

Parallels and Redundancies

Let’s define a different circuit - one with a parallel (sub)system.

Here, we can see that knocking out $R_1$ would break current to $\beta_1$.

But knocking out $R_2$ would leave $\beta_1$ intact. Same with knocking out $R_3$.
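The same kind of sketch works for the parallel case. I'm assuming here that $R_1$ sits in series with a parallel pair $R_2 \parallel R_3$, since that is the topology consistent with the knockouts described above; `parallel_behavior` and `knock_out` are hypothetical helper names.

```python
RESISTORS = ("R1", "R2", "R3")


def parallel_behavior(intact: dict) -> bool:
    """beta_1 needs R1 intact plus at least one intact branch (R2 or R3)."""
    return intact["R1"] and (intact["R2"] or intact["R3"])


def knock_out(*names: str) -> dict:
    """Return the intact/knocked-out state after lesioning the named resistors."""
    return {r: r not in names for r in RESISTORS}


# Lesion one resistor at a time and record whether beta_1 survives.
single_lesions = {name: parallel_behavior(knock_out(name)) for name in RESISTORS}
print(single_lesions)
```

Only the $R_1$ lesion abolishes $\beta_1$ in this model, which is exactly the pattern that makes $R_2$ and $R_3$ look irrelevant.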

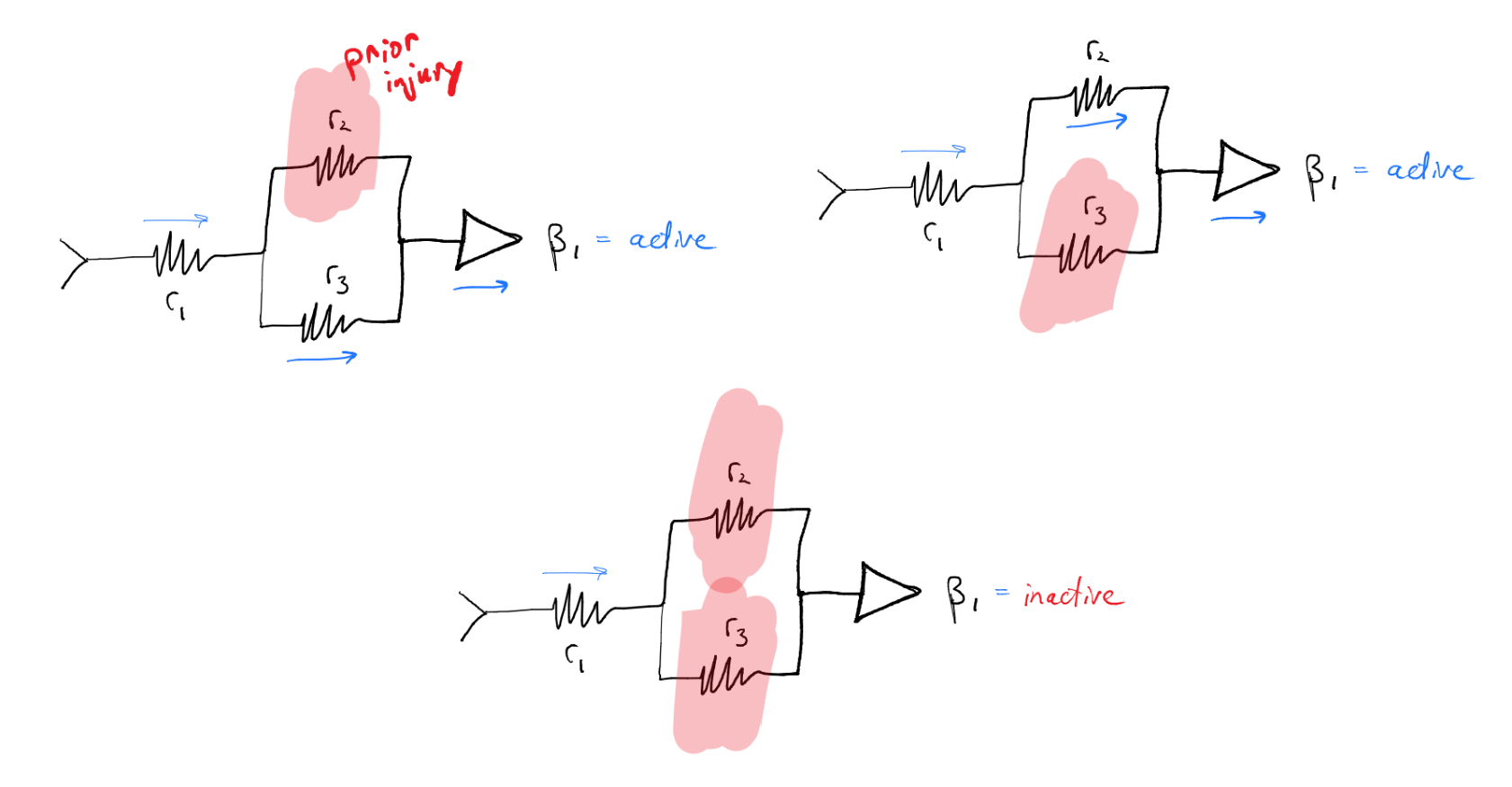

We may be tempted to conclude that $R_2$ and $R_3$ are not related to $\beta_1$. Except what if a new patient who walks into your clinic already has an injury at one of those “unrelated” resistors?

Say that prior injury is at $R_3$. Now we suddenly see that knocking out $R_2$ will definitely lead to a loss of $\beta_1$, because no parallel path is left.

This is a “two hit” circuit that we need to learn to capture - but this effect isn’t a simple relationship [2].
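One way to see why this isn't a simple relationship is to ask what a lesion does given what is already injured. The sketch below assumes the same toy topology as the text describes ($R_1$ in series with $R_2 \parallel R_3$); `beta_1_present`, `effect_of_lesion`, and `two_hit_pairs` are illustrative names, not anything from a real library.

```python
from itertools import combinations

RESISTORS = ("R1", "R2", "R3")


def beta_1_present(lesioned: frozenset) -> bool:
    """Assumed topology: R1 in series with the parallel pair (R2, R3)."""
    intact = {r: r not in lesioned for r in RESISTORS}
    return intact["R1"] and (intact["R2"] or intact["R3"])


def effect_of_lesion(target: str, prior: frozenset) -> bool:
    """True if lesioning `target` abolishes beta_1, given the prior injuries."""
    before = beta_1_present(prior)
    after = beta_1_present(prior | {target})
    return before and not after


print(effect_of_lesion("R2", frozenset()))        # healthy baseline
print(effect_of_lesion("R2", frozenset({"R3"})))  # prior injury at R3

# "Two hit" pairs: each lesion alone is silent, but together they abolish beta_1.
two_hit_pairs = [
    pair
    for pair in combinations(RESISTORS, 2)
    if not beta_1_present(frozenset(pair))
    and all(beta_1_present(frozenset({r})) for r in pair)
]
print(two_hit_pairs)
```

The effect of the $R_2$ lesion flips depending on the state of $R_3$, so no map built from single lesions on an otherwise healthy circuit can predict it.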

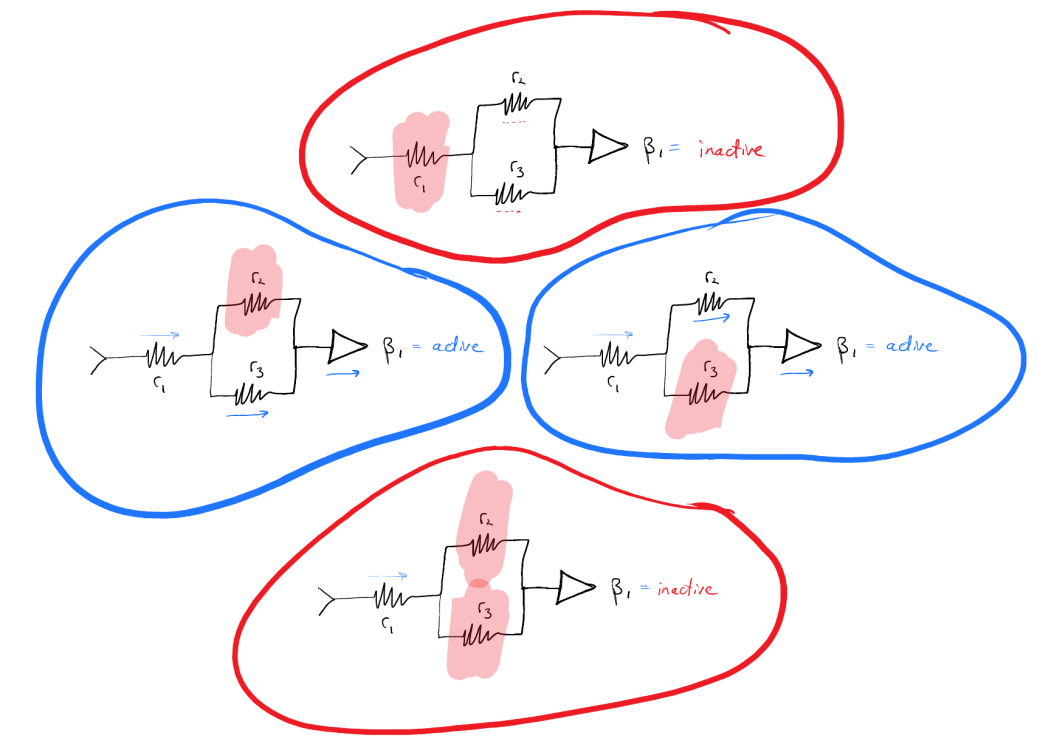

Parting Thoughts

The brain is highly parallel and arguably immensely redundant [3]. Being able to build circuit maps that are capable of capturing these parallels and redundancies is crucial.

[1] You can see my loose constellation of thoughts spread across a few posts so far: “Circuits: Beyond Structures”, which gives some of my axioms for “brain circuits”; “Lesions and Symptoms”, which starts us thinking about how “brain circuits” can be more formalized with respect to behavior; and “Kuramoto and his circular dance”, which reveals a bit of where all of this is going…

[2] Linear thinking is everywhere in medicine. We tend to build a map/relationship using null hypothesis testing, then assume that map holds everywhere in the input space. This is so trivially wrong and trivially easy to show as such.

[3] One reason I think industry’s claims of AGI are nonsense is that industry builds “AI” hyperfocused on efficiency. They’re actively avoiding redundancy even though it’s a central feature of every single unequivocally intelligent system in the universe (thus far known).